Penpot's AI whitepaper

This piece explains some of Penpot's relevant findings around AI and UI Design, what we’re building (and why) and what you should expect from us in the future.

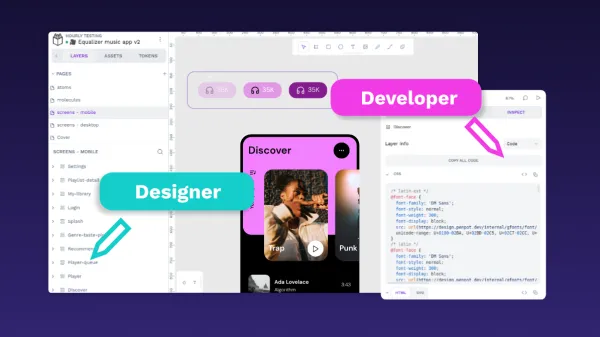

[Landed here without prior knowledge on Penpot? We are the web-based open-source design tool that bridges the gap between designers and developers]

Some of our core beliefs around how design and code should work together have proven to be non-negotiable for the future of the software industry as a whole.

Today, we feel we are ready to share some of our relevant findings around AI and UI Design, what we’re building (and why) and what you should expect from us in the future.

Even if you are not a software builder or a daily Penpot user, we hope that reading this piece will provide you with a nuanced perspective on why the vast majority of the current industry efforts (vibe coding/designing, design to no-code, prompt to prototype) are doomed to fail.

For those of you that are already identifying those "glitches in the matrix", this piece will bring you some "Aha" moments.

[Important update, Dec 4th 2025] We released a first version of our MCP Server. Feel free to browse this folder with raw footage. At least a dozen videos showcase different scenarios. My favourite ones are 03, 04, 06, 08 and 12.

Framing the challenge: UI Design is particularly hard for genAI

Let’s start with the obvious. UI Design is a hard problem. This stems from the fact that a UI design is both a (non-sequential) visual representation and a (sequential) code one, and they are both strangely coupled.

The visual representation relies on our brains to interpret a highly sophisticated symbolic language (icons, emojis, intent, calls to action, words, etc) blended with spatial relations (contained in, adjacent to, aligned with, etc) and stylistic relationships that reify the language (similarity of hue, border, shape, etc). At the same time, the code rendering is a sequence of text instructions for design tools or user devices to interpret them and produce the intended visual output.

But here’s where things turn out to be even more complex. A visual hierarchy between two elements doesn’t need to match an internal code hierarchy between those two same elements (if they happen to be, in fact, matching elements, or just elements to begin with). Operating at a symbolic language level, a header structure visually containing child elements doesn’t need to show an identical hierarchical relationship when looking at its code representation.

See this example. The visual "card" on the left expresses opinionated hierarchies and relationships. Would you consider the "fav" icon to belong to the picture or to the card as a whole instead? Or which one, the picture or the name of the place, would you think is the single most important piece of information? Interestingly, the code section on the right follows its own rules, which a trained eye might assess as being "not the obvious first choice but definitely smart for tactical reasons".
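To make the mismatch concrete, here is a minimal sketch that compares the parent a layer has in the code tree with the parent its bounding box suggests visually. The `Node` type, the card coordinates and the "smallest enclosing rectangle" heuristic are all assumptions made for illustration; they are not how Penpot or any particular tool models layers.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    x: float
    y: float
    w: float
    h: float
    children: list["Node"] = field(default_factory=list)


def flatten(node, parent=None):
    """Yield (node, code_parent) pairs for the whole code tree."""
    yield node, parent
    for child in node.children:
        yield from flatten(child, node)


def contains(outer, inner):
    """True if inner's rectangle lies fully inside outer's rectangle."""
    return (outer.x <= inner.x and outer.y <= inner.y
            and inner.x + inner.w <= outer.x + outer.w
            and inner.y + inner.h <= outer.y + outer.h)


def visual_parent(node, all_nodes):
    """Smallest other rectangle that fully contains the node, if any."""
    candidates = [n for n in all_nodes if n is not node and contains(n, node)]
    return min(candidates, key=lambda n: n.w * n.h, default=None)


def name_of(node):
    return node.name if node else None


# Hypothetical card: the "fav" icon is drawn on top of the picture,
# but in the code tree it is a direct child of the card.
picture = Node("picture", 0, 0, 300, 180)
fav = Node("fav", 260, 10, 24, 24)
title = Node("title", 16, 196, 200, 24)
card = Node("card", 0, 0, 300, 240, [picture, fav, title])

pairs = list(flatten(card))
nodes = [n for n, _ in pairs]

# Map each layer whose two parents disagree to (code parent, visual parent).
mismatches = {}
for node, code_parent in pairs:
    seen_parent = visual_parent(node, nodes)
    if name_of(code_parent) != name_of(seen_parent):
        mismatches[node.name] = (name_of(code_parent), name_of(seen_parent))

print(mismatches)
```

In this toy card only the "fav" icon disagrees: the code says it belongs to the card, while the geometry says it belongs to the picture. Neither answer is wrong, which is exactly the point.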

This dual nature of a UI Design is often referred to by the Penpot team as the “light dilemma” (no pun intended) because it also has a split personality. Light can behave both as particles or as a wave. You will find it more particle or wave-like depending on the situation, but it is still endowed with its double nature, just as UI is primarily code or visuals depending on who is interacting with it and to what end.

If you feel more comfortable with an analogy from the philosophy of language, consider that this very text creates, as you read, highly symbolic representations in your brain (some of which we call thoughts). In this sense, you could consider human language (oral or written) as the sequential representation of thought, which strongly feels not bound by a “sequential” structure.

We shouldn’t push these metaphors (particle-wave, language-thought) much further than this but we hope to have expressed that the visuals-code duality of design is not unlike the relation between thought (parallel) and language (serial), and just as constitutive of it as the particle and wave nature of light.

Interestingly, the challenge of understanding UI Design doesn’t stop at the dual representation level of UI Design. These representations are themselves the result of a process. And a design process is fundamentally non-linear in its iterative artifact generation. So, not only do we have to deal with a dual-artifact representation that obeys two different sets of rules (cognitive/storage, parallel/sequential, visual/code) but we also need to take into account the dimension of time.

In other words, AI assistance should be granular enough to be interwoven with the process, not remaining just as a sequence of human-AI hand-offs.

The good, the bad and the ugly: The current industry efforts are a mixed bag of misconceptions, worthwhile approaches and outright false promises

Let's take a look at the current engineering zeitgeist. We'll go in reverse order.

The ugly: genAI powered Design-to-No-code workflows

This approach starts with a UI design already crafted in a UI Design tool. Then, through UI Design extensions or third-party plugins we take one or many parts of the UI design and attempt to convert them into functional websites or apps that make those designs come alive with very little intervention from the designer and zero developer participation.

Recently released Figma Sites is a good example of this archetype. Figma Sites is a product within the Figma Suite platform that allows users to design, prototype, and publish websites directly from Figma.

You are supposed to use Figma just like you always do, and when you’re ready, you simply hit “publish” to turn your designs into a live website hosted elsewhere. In theory, Figma infuses logic into the designs for an overall seamless interaction.

The fundamental limitation of this approach is that Figma, and other design tools à-la-Figma or conceived during the 2010s, continue to define a UI design mostly using an imperative design paradigm. They have prioritised appearance over structure. This means that these tools have built a hyper-controlled WYSIWYG environment full of visual hacks and ad-hoc logic that seems to disregard some quite basic software engineering principles.

An example of this is how Figma’s Autolayout treats absolute positioning of its contained elements. The developer can’t trust what they see in the layers panel and has to rely instead on Figma’s own Dev Mode output. Dev Mode is able to reinterpret relative positions based on those absolute positions but at the cost of having two sources of truth: design and code are disconnected. As one of the Penpot designers likes to put it: “Design says ‘car fast red’ and Dev Mode outputs ‘el coche rojo es rápido’, but couldn’t we just have said what we meant, namely ‘the red car is fast’?”

If fidelity to visuals instead of fidelity to intent hadn’t been prioritized, we wouldn’t have experienced so much hand-off drama between designers and developers in the first place.

Unfortunately, the same lost-in-translation pain that continues to plague design hand-offs won’t go away with this new automagic pipeline. Sure, there may not be developers around to complain about code quality, but other than gimmicky effects and simple flows on “landing-page world”, designers should be ready to be disappointed most of the time.

Given that fine-tuned brute-force technologies guzzling gargantuan loads of money and training datasets have taken everyone by surprise, it’s hard not to expect that even more of just that will be able to crunch any neighbouring problem. However, at Penpot we predict that this LLM-based approach based on imperative design principles might fail to address the genuine problems of scaling design and efforts should be directed elsewhere.

“Your results may be non-deterministic because AI” Dylan Field, Figma CEO, Figma Config 2025.

The Penpot way: Go Declarative Design instead of Imperative Design

Penpot, by contrast, offers a much stronger source of truth for design-to-code (and its related no-code and low-code siblings) efforts because it already runs on a code-first paradigm beneath its UI design interface.

This is the Declarative Design approach. The quest to fully represent visual design as a language is still ongoing, but Penpot is definitely on the right track by being CSS-honest. Moreover, since it’s open source, hackers and builders can contribute to this collaborative endeavour too.
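The difference between the two paradigms can be sketched in a few lines. The example below is an illustration only: `layout_row` is a hypothetical, heavily simplified stand-in for a CSS-like flex row (padding and gap, nothing else), and the element names and sizes are made up.

```python
def layout_row(widths, padding, gap):
    """Compute x offsets and total width for children of a flex-like row."""
    xs, cursor = [], padding
    for w in widths:
        xs.append(cursor)
        cursor += w + gap
    total = cursor - gap + padding if widths else 2 * padding
    return xs, total


# Imperative: positions are stored as literal numbers that someone typed in.
imperative = {"icon": 16, "label": 48, "badge": 128}

# Declarative: only the rule is stored; positions are derived from it.
rule = {"padding": 16, "gap": 8}
widths = {"icon": 24, "label": 72, "badge": 20}

xs, total = layout_row(list(widths.values()), **rule)
declarative = dict(zip(widths, xs))

# The label text gets longer. The rule is re-applied; the literals go stale.
widths["label"] = 100
xs_after, total_after = layout_row(list(widths.values()), **rule)
declarative_after = dict(zip(widths, xs_after))

print(declarative, total)
print(declarative_after, total_after)
```

Both representations start out pixel-identical. After the label grows, the declarative row moves the badge out of the way, while the imperative coordinates still place it at 128, on top of a label that now ends at 148. The visuals matched; the intent was only stored in one of them.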

The benefits are tangible and have been demonstrated by Locofy: not only does their premier AI design-to-(real)code tool work much more reliably with Penpot, it also took them a fraction of the time to develop!

The bad: genAI-powered code to prototype

This approach neglects the design tooling completely since it wants to go from prompt to a hi-fi working prototype as fast as possible, blending in both the design and the coding processes.

The intended audience is mostly developers and tech-savvy people who tolerate tinkering with huge chunks of semi-working code. We’re also seeing some technical designers who are not afraid of looking at code and exploring other ways of bringing their ideas to life.

“We know that we won’t be able to satisfy the expectations of people building complex software with our LLM-based prompt-to-code product, but we are prisoners of this hype cycle. Also, please don’t ask me about the churn.” Unnamed high-ranking executive at one of the industry leaders, 2025.

Cursor, Lovable, Windsurf or v0 are examples of this (often vibe-driven) approach.

At Penpot, we believe that this might be an interesting approach for fast prototyping ideas and concepts, making some of the mockup & prototyping features found in tools like Penpot less necessary for specific use cases. We also believe it can provide you with a more opinionated starting boilerplate code to start development from.

However, we have observed two very obvious shortcomings.

The first issue is that rapidly going from prompt to prototype and, subsequently, from prototype to final product, is hostile to a collaborative workflow. The mesmerizing and empowering feeling of being able to “build and ship” remains, however, a conversation in an echo chamber. Insisting on going beyond “solo-mode” is very likely to bring a sense of constant wasteful conflict to the team. This limits what you can actually build.

The second shortcoming is even more fundamental. The training datasets that enable LLMs to manipulate designs-as-code consist of the final results that made their way onto publicly scrapable websites, often quite far removed from the original, intended design, let alone the process leading to it. You’re copying from a lossy copy, which is in turn shaped by a dominant visual narrative that might not be your cup of tea.

There are better ways to honour Jakob's Law of similarity than just rushing to the bottom.

If we think about it, it’s quite ironic that the traditional lost-in-translation design-to-code handoff is now inspiring new designs, perhaps further exacerbating this cursed telephone game.

So, if you’re building “solo”, technical debt is somebody else's problem and polished, cohesive user experience is not a priority, go for it. Otherwise, reserve it for rapid prototyping of mockups.

The Penpot way: Challenge whether pursuing full-featured prototyping capabilities for Penpot still makes any sense

Historically, UI Design tools needed to cover the “interactive mockup” bit of the whole Design Process conundrum. These days, perhaps those extremely demanding product team efforts could be better directed elsewhere. In other words, if an interactive prototype can be inferred by AI by just looking at the designs and their structure, then presenting a good enough prototype to stakeholders could get you 90% of the way already. If we confirm this with our ongoing research, we will probably make big changes on our interactive prototyping capabilities roadmap.

The good: Agentic AI and MCP servers

Let’s switch gears a bit while still borrowing some ideas already expressed above. As people and companies started creating on top of the building blocks of genAI, it was soon understood that connecting different pieces of software through an equivalent of a universal bus protocol would get us to the next level. This is similar to what happened when we went from memorizing bus routes to just asking an agent (Google Maps et al.) to do that work for us, to finally go on building the mashup app ecosystem back in the days of web 2.0. We’ve seen this before and it makes total sense.

“genAI is now at the Trough of Disillusionment, so we recommend that you switch to Agentic AI, which still hasn’t arrived at the Peak of Inflated Expectations of our Hype Cycle” Daniel Sánchez Reina. “Expansion Strategy, Leadership Vision & Buyer Priorities” Gartner Event, Spain, 2025.

What is particularly interesting is how easy MCP servers seem to make it to expose your app's capabilities through this new genAI-friendly API. If you have a well-documented traditional API, you can easily build your own MCP server and let any AI agent connect to it and operate quickly and autonomously.

From the perspective of a UI Design tool, this opens up the possibility of a glorified “design by command line” approach. If your API is comprehensive and well built, you could, in theory, welcome agents to be your design co-pilot (or even the pilot).
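To give a feel for what "design by command line" means in practice, here is a minimal sketch of the idea behind an MCP server: existing API functions are advertised as tools and invoked through JSON-RPC 2.0 messages (`tools/list` and `tools/call`). Everything else is an assumption made for illustration: the in-memory `DESIGN` store and the `create_rectangle` tool are hypothetical, and the sketch leaves out the initialization handshake, transports and error handling that a real server built with an official MCP SDK would provide.

```python
import json

# Hypothetical in-memory "design API"; a real server would call the tool's API.
DESIGN = {"shapes": {}}


def create_rectangle(name: str, width: float, height: float) -> str:
    DESIGN["shapes"][name] = {"type": "rect", "width": width, "height": height}
    return f"created {name}"


# Each tool pairs an existing API function with a description and a JSON Schema.
TOOLS = {
    "create_rectangle": {
        "handler": create_rectangle,
        "description": "Create a rectangle on the current board.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "name": {"type": "string"},
                "width": {"type": "number"},
                "height": {"type": "number"},
            },
            "required": ["name", "width", "height"],
        },
    }
}


def handle(request_json: str) -> str:
    """Answer a single JSON-RPC request for tools/list or tools/call."""
    req = json.loads(request_json)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# What an agent would send after discovering the tool via tools/list.
reply = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "create_rectangle",
               "arguments": {"name": "hero", "width": 320, "height": 200}},
})))
print(reply["result"]["content"][0]["text"])
```

Note how little the agent learns from this interface: a name, a description and a schema. Whatever semantic structure the underlying design model lacks cannot be added at this layer.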

If all this is true, why are we then confronted with underwhelming results? MCP servers that allow you to “interact” with design tools seem unable to produce cohesive and sustainable designs beyond the first few iterations. The answer to this lies again in how traditional UI design tools have built their internal design structure, lacking taxonomical and semantic value, making it almost impossible for an LLM to identify meaningful patterns that have to remain consistent across multiple iterations.

There are honourable attempts at using UI components (read Design Systems) as a reliable meso-structure, whether they are the intended outcome or the qualified input of such prompts. However, these UI components found in traditional UI Design tools are usually articulated following the principles of imperative design, arbitrary layers/design primitives and hard-coded property values; a combination that echoes the first half of the old adage “garbage in, garbage out”, from which not even almighty LLMs can escape.

The Penpot way: Design Tokens to bring intent to design

Penpot was born in the early 2020s, when complex design systems were conceived to address the ever-growing demand for better software design. The Design System honeymoon phase is almost over now because current tools’ inability to deal with complexity that is orders of magnitude greater has led to the “When did UI Design become boring?” drama.

True, Design Systems brought a way to standardize how you build apps at scale, but without an extra layer of semantic design guidance, it remains a Duplo instead of a Lego.

Thankfully, we have Design Tokens. They represent small design decisions encapsulated in tiny bits of code that you can use, reuse and combine to create more intentional design decisions. A design token can be as obvious as a color, but it could also be a border radius or an opacity. There are many design token types but most complex designs will usually use just a dozen. For instance, you can define a spacing design token (e.g. padding) as three times a dimension design token that is also referenced across a whole multi-brand identity theme. Changing a design token has automatic ripple effects. That is why some people have referred to this as parametric design. Whatever you call it, it unlocks truly adaptive UIs.
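The "three times a dimension token" case and its ripple effect can be sketched as follows. The token set is loosely shaped like DTCG JSON (`$value` keys and `{alias}` references), but the token names, the unitless numbers and the `* 3` arithmetic syntax are assumptions made for this illustration, not part of any standard or of Penpot's implementation.

```python
import re

# Loosely DTCG-shaped tokens. The "* 3" arithmetic is a hypothetical
# extension used only for illustration; values are unitless for simplicity.
tokens = {
    "dimension.base": {"$value": 4},
    "spacing.padding": {"$value": "{dimension.base} * 3"},
    "button.padding": {"$value": "{spacing.padding}"},
}

# Matches "{some.token}" optionally followed by "* <number>".
ALIAS = re.compile(r"^\{([^}]+)\}(?:\s*\*\s*(\d+(?:\.\d+)?))?$")


def resolve(name, tokens, seen=()):
    """Follow alias references until a literal value is reached."""
    if name in seen:
        raise ValueError(f"circular reference: {' -> '.join(seen + (name,))}")
    value = tokens[name]["$value"]
    if isinstance(value, str):
        match = ALIAS.match(value.strip())
        if match:
            base = resolve(match.group(1), tokens, seen + (name,))
            factor = float(match.group(2)) if match.group(2) else 1
            return base * factor
    return value


before = resolve("button.padding", tokens)

# One design decision changes; everything that references it follows.
tokens["dimension.base"]["$value"] = 5
after = resolve("button.padding", tokens)

print(before, after)
```

The button never stored a padding of 12. It stored a reference to a decision, so changing the base dimension from 4 to 5 moves the button padding from 12 to 15 without touching the button.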

With the help of design tokens, we’re closer to delivering on our promise of making Penpot deal with complexity so you can focus on creativity.

The good news: they are all expressed canonically as code thanks to a W3C DTCG draft standard. It took a lot of courage two years ago, when we started this journey with the folks at Tokens Studio, but today Penpot is the only UI Design tool to support Design Tokens natively. The even better news: LLMs couldn’t have dreamt of an easier way to identify the design decisions that are invariant to an iterative design process.

The wildcard: Computer use

There's a recent and emergent (perhaps underrated?) approach: computer use. Anthropic pioneered this a while ago, and now Manus has a computer use agent. ChatGPT recently gained one as well, and there are many other lesser-known commercial and open-source solutions.

It might be that AI agents will eventually be able to use computers and learn patterns of designer behavior really well, but at Penpot we're unsure whether this is the smartest way to use computing power. It feels to us that UIs should focus on a human-centric HUD paradigm and give AI agents a much more native high-bandwidth data-centric environment.

Penpot’s AI roadmap: in-app ML + Agent eXperience + open file format

As we continue to build Penpot, we want to make sure that we can finally unlock the next logical step in collaborative software-building: designers and developers (and everyone and everything in between) finally working together.

Declarative design principles brought Penpot a rules-based visual language inspired by CSS. Design Systems and Design Tokens brought us scalable semantic design structures. Finally, our own open .penpot format means that every Penpot file is a zip file full of JSON files and visual assets. If we really want to build the next era of software building, we need to see both design and code as equally important. Hence our “Design as Code” motto.

In practical terms, though, we have decided to focus on three major areas. We will share the most important aspects of them and leave bigger announcements for a later date.

In-app task-oriented ML capabilities so users can speed up their creativity

If we widen the focus a bit from the presently familiar genAI tools, we find in machine learning at large an array of algorithms to help automate repetitive tasks, tame complexity and generally bring more structure into design with less work. With a task-oriented focus, we can adaptively prioritise algorithmic properties such as interpretability, reproducibility, controllability, calibrated uncertainty, data efficiency, inference latency, or privacy.

The best tool is the one that weighs light on the hand and disappears from your brain, so that you feel one with the task.

For instance, Penpot should be able to predict with high confidence when you might be reinventing the wheel by creating a new button, especially when one of the design libraries you’re using already has a matching candidate. Asked about that possibility, you could autocomplete (perhaps just hitting the tab key) and accept the suggestion or reject it and let Penpot know that this should become a new button component once it’s finished.
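As a rough illustration of the "matching candidate" idea, the sketch below scores a draft shape against library components by the share of identical properties and only speaks up above a threshold. The property names, the library entries, the scoring rule and the 0.75 threshold are all hypothetical; a real in-app aid would likely rely on a learned model and much richer structure.

```python
def similarity(a: dict, b: dict) -> float:
    """Share of properties (over the union of keys) with identical values."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    same = sum(1 for k in keys if k in a and k in b and a[k] == b[k])
    return same / len(keys)


def suggest_component(draft, library, threshold=0.75):
    """Return (name, score) for the best library match, or None if too weak."""
    best = max(library.items(), key=lambda kv: similarity(draft, kv[1]), default=None)
    if best is None:
        return None
    score = similarity(draft, best[1])
    return (best[0], score) if score >= threshold else None


# Hypothetical design library and two drafts a designer might be drawing.
library = {
    "button/primary": {"fill": "#3b82f6", "radius": 8, "padding": 12,
                       "font_size": 14, "text_fill": "#ffffff"},
    "button/ghost": {"fill": "transparent", "radius": 8, "padding": 12,
                     "font_size": 14, "text_fill": "#3b82f6"},
}
near_duplicate = {"fill": "#3b82f6", "radius": 8, "padding": 12,
                  "font_size": 16, "text_fill": "#ffffff"}
something_new = {"fill": "#111827", "radius": 0, "padding": 4,
                 "font_size": 11, "text_fill": "#f9fafb"}

suggestion = suggest_component(near_duplicate, library)
no_suggestion = suggest_component(something_new, library)
print(suggestion, no_suggestion)
```

The first draft differs from `button/primary` only in font size, so it is offered as a suggestion the designer can accept or reject. The second draft shares nothing with the library, so the tool stays quiet and the designer is free to promote it to a new component.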

It’s no surprise that structured design data —like design systems with components and variants, and design tokens— are perfect for serving as a “behind the scenes” safety net. We believe that our in-app aids won’t only speed up the design process but also encourage designers to be bolder and more creative, knowing that Penpot handles all the low-level complexity while keeping intentional design user-centric.

We are exploring three specific AI tasks: componentisation (with or without an a-priori component), layer structuring and design token identification.
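Design token identification, in its simplest form, could start by noticing hard-coded values that keep reappearing. The following sketch counts repeated property values across a layer tree and reports those used at least twice as token candidates. The layer structure, property names and the `min_uses` cut-off are assumptions for illustration, and plain counting is a deliberately naive stand-in for whatever technique ends up being used.

```python
from collections import Counter


def walk(layer):
    """Yield a layer and all of its descendants."""
    yield layer
    for child in layer.get("children", []):
        yield from walk(child)


def candidate_tokens(root, props=("fill", "radius"), min_uses=2):
    """Map (property, value) pairs to their use count when repeated enough."""
    counts = Counter(
        (prop, layer[prop])
        for layer in walk(root)
        for prop in props
        if prop in layer
    )
    return {key: n for key, n in counts.items() if n >= min_uses}


# Hypothetical board with hard-coded values scattered across layers.
board = {"name": "board", "children": [
    {"name": "card", "fill": "#ffffff", "radius": 8, "children": [
        {"name": "cta", "fill": "#3b82f6", "radius": 8},
        {"name": "link", "fill": "#3b82f6"},
    ]},
    {"name": "banner", "fill": "#fde68a", "radius": 2},
]}

candidates = candidate_tokens(board)
print(candidates)
```

Here the blue fill and the radius of 8 each appear twice and surface as candidates, while one-off values are left alone. Deciding what a candidate means (a brand color, a corner style) is where the designer's intent comes back in.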

What we like about this line of work is that it focuses on the decision-making process, and there’s potential to contextually adapt our assistance to how different users work. This divide and conquer approach leaves room for an extremely cost-effective personalised aid. What's undoubtedly a challenge is to provide our AI task with a clear status + direction of intent that is aware of the previous steps. This "3-part context window" has to be fine-tuned for every AI task.

Penpot MCP Servers so that our community unlocks the platform’s true potential

Since launching our Penpot alpha, we’ve received constant praise for our vision and groundbreaking product.

People around the world marvel at what we have been able to achieve in so little time. However, it’s impossible for us to build absolutely everything around the Penpot ecosystem (and, to be frank, we don’t want to either).

LLMs have a hard nut to crack with design for all the reasons above. But given the phenomenal progress in the ecosystem (models + tooling), there is a good chance that, with the right tools and mindset, they will delight and help us in unexpected ways.

With our enthusiastic community, at Penpot we have a better chance than anyone else to discover how and where LLMs can best help the design process. This is why we have decided to provide an official Penpot MCP server to give everyone the ability to interact with a powerful and reliable design source of truth in ways we can’t even imagine at this time.

One example of what a Penpot MCP server of the calibre we're envisaging could unlock is making it easier to channel back production data about how your software is being used. From the simplest A/B test to more nuanced insights, this could allow an AI agent to surgically refactor bits of a design system to better adapt it to relevant emerging scenarios. Data and heuristics encourage a bolder design process and we want our Penpot MCP server to unlock a full-duplex design-production communication.

In this sense, it’s crucial that our MCP server exposes our design primitives, plugins API, declarative design options, design system capabilities, design tokens and semantic inference aids in a way that you can really speak the “design’s code”. We’ll provide the most configurable MCP server we can, so that you don’t need to create one (and support you if you still want to).

So, whether you’re building new products and businesses on top of Penpot or connecting Penpot to your software building pipeline, watch out for this when it’s released.

In the meantime, you can try some worthwhile community efforts like this one.

[Important update, Dec 4th 2025] We released a first version of our MCP Server. Feel free to browse this folder with raw footage. At least a dozen videos showcase different scenarios. My favourite ones are 03, 04, 06, 08 and 12.

Penpot .penpot open format

Did you know that when you download a Penpot file using the .penpot format, you are downloading everything in a zipped file containing folders, JSON files and visual assets (SVGs, JPEGs, etc)?

This was released with Penpot 2.4 in January 2025 and though its potential wasn’t immediately obvious, more and more people are starting to see the .penpot file format as Penpot’s file-based API.

Two features in the works will allow for changes made in your local file-system to update the original Penpot instance file. With persistent universal references, updates will naturally cascade out to dependent files. This opens up the possibility to git-version designs (e.g. together with synced code), and seamlessly switch back and forth between sophisticated visual edits in Penpot and local text-based powertools (AI or AI-less).

With Penpot, you should be able to choose the best tool for the job, just as you can self-host it or use our SaaS.

Next, we show a Penpot component library where we want to modify a couple of properties for a button component as well as library-wide typographies. We download that file as a .penpot file, edit a bunch of internal JSON files and re-upload it using the API. Penpot makes sure to preserve inter-file dependencies so that a design using the modified library is also updated.
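The "download, edit JSON, re-upload" loop described above can be sketched with nothing but the standard library. The helper below rewrites every JSON member of a zip archive and copies everything else untouched. It runs against a toy archive built in memory: the member names (`components/button.json`, `assets/logo.svg`) and the JSON keys are hypothetical and do not reflect the actual internal layout of a .penpot file, and the upload step through the API is left out.

```python
import io
import json
import zipfile


def rewrite_json_in_zip(data: bytes, edit) -> bytes:
    """Return a new archive where every .json member was passed through edit()."""
    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(data)) as src, \
         zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            payload = src.read(info.filename)
            if info.filename.endswith(".json"):
                doc = edit(info.filename, json.loads(payload))
                payload = json.dumps(doc, indent=2).encode("utf-8")
            dst.writestr(info.filename, payload)
    return out.getvalue()


def make_toy_archive() -> bytes:
    """Build a stand-in archive; names and keys are made up for this sketch."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as z:
        z.writestr("components/button.json",
                   json.dumps({"name": "button", "radius": 4}))
        z.writestr("assets/logo.svg", "<svg/>")
    return buf.getvalue()


def round_buttons(filename, doc):
    """Example edit: give the button component a larger corner radius."""
    if doc.get("name") == "button":
        doc["radius"] = 12
    return doc


updated = rewrite_json_in_zip(make_toy_archive(), round_buttons)
with zipfile.ZipFile(io.BytesIO(updated)) as z:
    button = json.loads(z.read("components/button.json"))
    logo = z.read("assets/logo.svg")

print(button, logo)
```

Because the edit is an ordinary function over parsed JSON, the same loop works whether the change comes from a script, a text editor or an AI agent, and the resulting files can be diffed and versioned like any other text.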

At Penpot, we predict that bigger and more complex designs and design systems will emerge from the possibility of dealing with automated processes that were previously unthinkable. Imagine your current designs times 10 or even 100. This is also why we are working so hard to bring you a completely new Penpot rendering engine, built from scratch and ready for the avalanche of the next generation of UI Design work. We don't have a due date but the Open Beta is expected for the end of September.

Final remarks

As an open-source company and product, at Penpot we believe in the social contract that establishes that collaboration is both the right thing to do and the smart thing to do. This requires extreme doses of transparency and trust as well as a resilient ecosystem of users, contributors, companies and organisations.

The same applies to Penpot’s AI vision.

In the next few months we will be releasing more ways to make our nuanced perspective a reality for everyone, respecting a sacred rule chez Penpot: we build in the open, you remain in control.